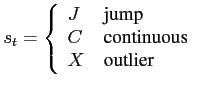

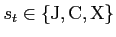

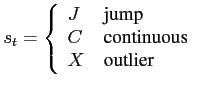

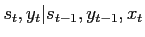

Outlier cleaning of time-series requires an extension of HMM-AR, section 7: Observations are the continuos variables  . A discrete state variable

. A discrete state variable

represents the state of observation

represents the state of observation

|

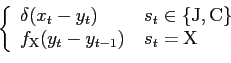

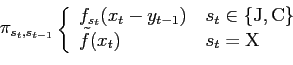

(35) |

and a second hidden variable  denotes the ``shadow path'' which needs to be tracked

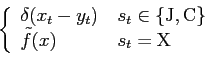

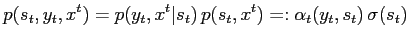

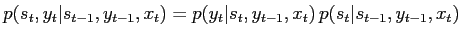

when an observed quantity is identified as an outlier. The conditional probabilities are:

denotes the ``shadow path'' which needs to be tracked

when an observed quantity is identified as an outlier. The conditional probabilities are:

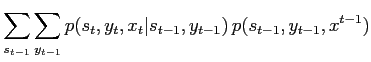

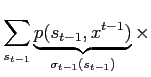
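To illustrate the role of the shadow path, the following is a minimal sketch and not the model defined by the equations above. It assumes a known AR(1) coefficient `a`, takes the outlier flags as given rather than inferring them, and replaces each flagged observation by the AR prediction from the previous shadow value. The function name `shadow_path` and all parameter names are hypothetical.

```python
def shadow_path(y, is_outlier, a):
    """Track a cleaned copy of y under an assumed AR(1) model x_t = a * x_{t-1}.

    y          : list of observed values
    is_outlier : list of bools, True where y[t] is flagged as an outlier
    a          : AR(1) coefficient (assumed known in this sketch)
    """
    shadow = []
    for t, (obs, flagged) in enumerate(zip(y, is_outlier)):
        if flagged and t > 0:
            # Flagged observation: continue the path with the AR prediction
            # computed from the previous shadow value, not from the data.
            shadow.append(a * shadow[-1])
        else:
            # Regular observation (or no history yet): the shadow path
            # simply follows the observed value.
            shadow.append(obs)
    return shadow


y = [1.0, 0.9, 10.0, 0.7]
flags = [False, False, True, False]
cleaned = shadow_path(y, flags, a=0.9)
```

In this toy run only the third value differs from the data; the later AR predictions would be built on the shadow value rather than on the spike.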

It is useful to re-factorise the state probability

(37)

because then the forward recursion becomes

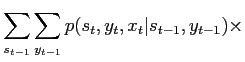

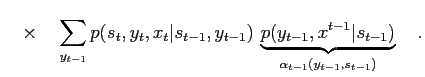
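For orientation, here is a sketch of the generic, textbook forward recursion for a discrete-state HMM with per-step normalisation. It is not the re-factorised recursion of this section: the shadow path is left out, and the observation likelihoods are assumed to be precomputed. The names `forward`, `pi`, `A` and `lik` are illustrative.

```python
import math


def forward(pi, A, lik):
    """Scaled forward recursion for a discrete-state HMM.

    pi  : initial state probabilities, length K
    A   : K x K transition matrix, A[i][j] = P(s_t = j | s_{t-1} = i)
    lik : T x K likelihoods, lik[t][k] = p(y_t | s_t = k)
    Returns the filtered state probabilities (T x K) and the log-likelihood.
    """
    K = len(pi)
    alpha = []
    loglik = 0.0
    for t, row in enumerate(lik):
        if t == 0:
            pred = list(pi)
        else:
            prev = alpha[-1]
            # One-step prediction: propagate the filtered probabilities
            # through the transition matrix.
            pred = [sum(prev[i] * A[i][j] for i in range(K)) for j in range(K)]
        # Weight by the likelihood of the current observation, then normalise.
        unnorm = [pred[k] * row[k] for k in range(K)]
        c = sum(unnorm)
        alpha.append([u / c for u in unnorm])
        # The normalisers accumulate to the log-likelihood of the sequence.
        loglik += math.log(c)
    return alpha, loglik


pi = [0.9, 0.1]
A = [[0.95, 0.05], [0.5, 0.5]]
lik = [[0.8, 0.1], [0.1, 0.9], [0.7, 0.2]]
alpha, loglik = forward(pi, A, lik)
```

Normalising at every step keeps the recursion numerically stable for long series.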

By re-factorising the update probability

(41)

it is possible to sample, because the two terms have a simple form:

Fig.: HMM-clean conditional dependencies.

Markus Mayer

2009-06-22
